AI User Researcher

Glyss

The Problem

Our team was conducting too few qualitative interviews because of limited staff capacity, tight timelines, and a small budget for external tools. We often defaulted to quantitative research, which led to assumption-driven decisions, product gaps, and costly rework after deployment. We needed a scalable, resource-light way to run more user interviews without overburdening the team.

Decreasing member engagement and retention

Apps lacked empathy & personalization

Product roadmaps based on hunches and assumptions

The Idea & The Approach

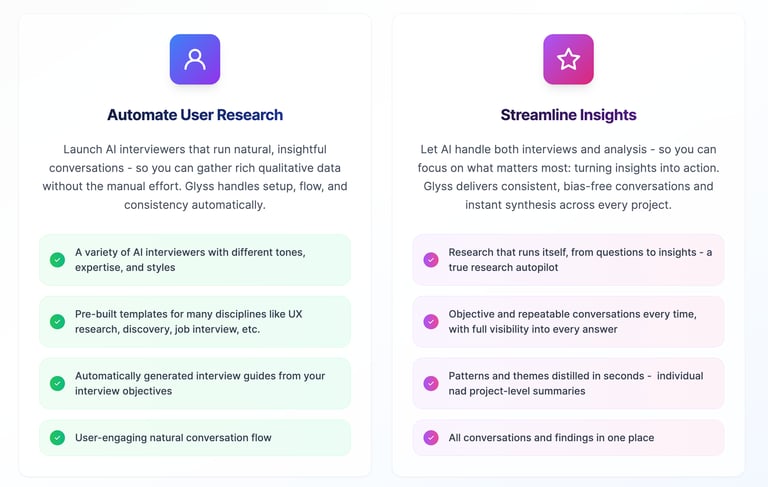

If we couldn’t scale research with people, we could scale it with AI as a moderator. Instead of manually preparing guides, scheduling interviews, and conducting them one by one, what if we created an AI-powered interviewer that could:

Generate tailored interview guides in minutes

Conduct qualitative interviews in a natural voice

Synthesize results automatically across dozens of sessions

This idea reframed the challenge: the barrier wasn’t time alone but the manual bottlenecks behind it. By removing those bottlenecks with AI, qualitative research could become as fast and scalable as quantitative surveys without losing the human touch.

I explored AI-enabled solutions and saw potential in the no-code AI app builders we had already used for simpler tasks. Building a complex tool internally was unrealistic given our engineering constraints, so I designed and built a custom AI-driven interview platform directly in Lovable, later integrating Claude Code to power more advanced functionality.

The Solution

The platform streamlined the entire qualitative research process:

AI Interview Guide Generation: Eliminated 5–6 hours of manual prep per research cycle by auto-generating tailored interview guides. Researchers only needed to review and refine each guide rather than write it from scratch.

Configurable AI Interviewer Personas: Set interviewer characteristics (tone, style, role) to match the research context.

Voice & Chat Interviews: Participants could engage via text or voice. About 70% preferred voice because it felt more natural and conversational.

Insights: Many interviewees preferred the AI persona to a human interviewer because they felt safe from judgment and could take longer to think before answering.

Automated Synthesis & Analysis: AI summarized results across dozens of interviews, highlighting themes, gaps, and emerging insights that even experienced researchers might have overlooked.

The Impact

10x scale-up: Interview volume grew from ~5 per month to 45–50 per month within two months.

Time savings: Eliminated 5–6 hours of manual prep per research cycle, freeing team capacity for deeper analysis.

Richer insights: Automated synthesis surfaced unbiased patterns and uncovered hidden research gaps, directly shaping product direction.

Adoption: My team adopted the tool to scale, not replace, human-led qualitative user interviews, which gave the research findings more weight.